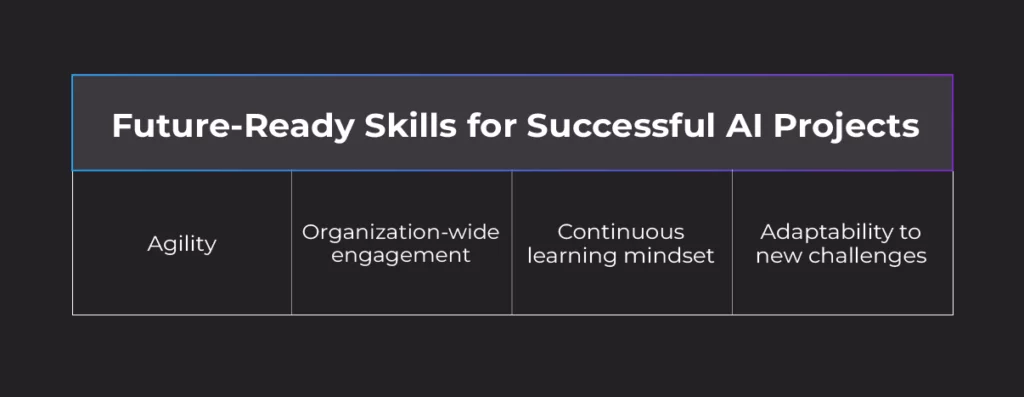

Generative artificial intelligence holds tremendous potential for the financial industry, but its implementation requires a thoughtful approach, grounded in realistic expectations, strong data management, and the adaptation of business processes. Banks that recognize these challenges and embrace AI with a strategic and flexible mindset will be well-positioned to gain a significant competitive edge. The key to success lies in future-ready capabilities such as agility, engagement, and a willingness to keep learning and adapting to emerging challenges.

Table of Contents

- Data Monetization vs. Quality and Management

- Data: The Fuel Powering Organizations – From Transactions to Interactions

- Implementation Strategy: Agile, Organizational Change, Innovation Culture

- Data Products and the Role of Metadata


Data Monetization vs. Quality and Management

Financial institutions collect and process vast amounts of data every day, yet they often fail to tap its full potential at scale. There are many possible reasons for this. According to Michał Walerowski, AI & Data Solutions Manager at Ailleron, one of the key issues is the lack of a unified view of data. Stephen Brobst, Chief Technology Officer at Ab Initio Software, adds that the quality of data, particularly back-office data such as the data handled while processing loan applications, is often insufficient for effective monetization. To change this, banks would need to implement more rigorous data management systems and automate processes to improve data quality.

It’s worth emphasizing that the data processed by banks is not an exhaustible resource – quite the opposite: the more it is used, the more valuable it becomes, and the more insights it can generate. In this context, generative AI can support banks by automating the creation of data quality rules, enabling more effective data management and paving the way for its monetization.

Data: The Fuel Powering Organizations – From Transactions to Interactions

Data is often called the oil of the 21st century, but unlike oil, data is a reusable resource. In fact, its value increases the more it is used. To unlock this growing value, banks must bring greater discipline to how they collect, govern, and monitor data, ensuring that its origin is traceable and its quality is continually refined. Data quality should also be measured as far upstream in the data supply chain as possible.

According to Brobst, most data defects are not the result of IT transformations or technical issues; they are typically caused by ineffective business processes. That’s why it’s essential to assess data quality at the earliest possible stage. There is a growing market need for tools that automate data quality management, identify defects, and even correct data automatically. Automating these activities is crucial, because measuring and improving data quality manually is very difficult to scale in a large banking environment.

In practice, generative artificial intelligence can be used to create data quality rules, just as it can be used to write code or communicate in a foreign language. At Ab Initio, creating data quality rules is simply a matter of defining a set of metadata.
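To make the idea of rules-as-metadata more concrete, here is a minimal Python sketch. It assumes a hypothetical rule schema (`field`, `check`, plus check-specific parameters) and made-up loan-application fields. It is not Ab Initio's metadata format or API. It only illustrates the general pattern in which rules are declared as data and evaluated by a generic engine that reports defects and a simple quality score.

```python
from typing import Any, Callable

# Hypothetical rule metadata: each rule is plain data, not code.
# The schema ("field", "check", parameters) and the loan-application
# field names are illustrative assumptions, not Ab Initio's format.
RULES: list[dict[str, Any]] = [
    {"field": "applicant_id", "check": "not_null"},
    {"field": "loan_amount", "check": "range", "min": 1_000, "max": 2_000_000},
    {"field": "currency", "check": "allowed_values", "values": ["PLN", "EUR", "USD"]},
]

# Generic check implementations, looked up by the "check" name in each rule.
CHECKS: dict[str, Callable[[Any, dict[str, Any]], bool]] = {
    "not_null": lambda value, rule: value is not None and value != "",
    "range": lambda value, rule: (
        isinstance(value, (int, float)) and rule["min"] <= value <= rule["max"]
    ),
    "allowed_values": lambda value, rule: value in rule["values"],
}


def validate(record: dict[str, Any], rules: list[dict[str, Any]]) -> list[str]:
    """Return one message per rule that the record violates."""
    defects = []
    for rule in rules:
        value = record.get(rule["field"])  # missing fields are treated as None
        if not CHECKS[rule["check"]](value, rule):
            defects.append(f"{rule['field']} failed {rule['check']}")
    return defects


def quality_score(records: list[dict[str, Any]], rules: list[dict[str, Any]]) -> float:
    """Share of records that pass every rule (1.0 means no defects were found)."""
    if not records:
        return 1.0
    clean = sum(1 for record in records if not validate(record, rules))
    return clean / len(records)


# Illustrative, made-up records.
sample = [
    {"applicant_id": "A1", "loan_amount": 250_000, "currency": "PLN"},
    {"applicant_id": "", "loan_amount": 500, "currency": "GBP"},
]
print(validate(sample[1], RULES))    # three defects: applicant_id, loan_amount, currency
print(quality_score(sample, RULES))  # 0.5
```

Because each rule is just another dictionary, adding or changing a check means editing metadata rather than code. That structure is also the kind of output a generative model could plausibly be asked to draft for human review before the rules are applied.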

Implementation Strategy: Agile, Organizational Change, Innovation Culture

In banking, implementing a project that leverages the capabilities of GenAI is not just a technological challenge; above all, it is a significant organizational one. Brobst points out that choosing the right technology often turns out to be the easiest part of the process. The real challenge begins after the solution goes live, and it lies in managing organizational change effectively. Without a well-thought-out transformation of business processes and active engagement from teams across departments, the project risks falling short of its goals.

Agility is, therefore, a key capability that organizations should cultivate to deliver successful projects. Brobst emphasizes the importance of adopting a step-by-step strategy that gradually moves the organization closer to its broader vision. An agile model, based on delivering incremental value, not only accelerates results but also enables better adaptation to the changing business landscape.

According to Walerowski, data-related projects are often perceived by business stakeholders as purely technical initiatives. As a result, business teams tend to be reluctant to get involved and prefer to focus on their day-to-day responsibilities. However, the reality is that without business ownership and active engagement, such projects are unlikely to succeed.

To fully understand client intentions and unlock the full potential of data for monetization, it’s essential to go beyond analyzing basic banking data. Basic banking data means transactional data, which represents the most granular level of detail in traditional banking.

Data Products and the Role of Metadata

It is not just the financial transaction itself that matters, but also all the interactions with the bank that preceded it: searches on the website and in mobile banking, conversations with banking advisors, branch visits, and all the actions that led to opening an account or withdrawing a deposit. Before a payment, loan, or other banking product is used, the client makes an important decision. That’s why leading banks ensure their contact centers and channels are fully equipped to monitor and support the entire customer journey leading up to that decision.

It’s essential to connect all these activities along the client-bank interaction chain, so that integrated data provides far greater insight into consumer behavior and ultimately enables monetization. Thinking about data monetization in this way naturally leads to a discussion of data-driven products.
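Connecting interactions to the transaction they preceded can be sketched as a simple time-window join. The Python below is a minimal illustration under stated assumptions. The `Interaction` and `Transaction` records and their fields are hypothetical. Every channel is assumed to already share one customer ID. The 30-day lookback window is an arbitrary choice. Real implementations would also need identity resolution across channels, which this sketch does not attempt.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Interaction:
    customer_id: str   # assumed to be shared across all channels
    channel: str       # e.g. "web", "mobile", "advisor", "branch" (illustrative)
    timestamp: datetime
    detail: str


@dataclass(frozen=True)
class Transaction:
    customer_id: str
    product: str       # e.g. "deposit_withdrawal" (illustrative)
    timestamp: datetime


def journey_before(txn: Transaction, interactions: list[Interaction],
                   window: timedelta = timedelta(days=30)) -> list[Interaction]:
    """Same-customer interactions within `window` before the transaction, oldest first."""
    start = txn.timestamp - window
    related = [
        i for i in interactions
        if i.customer_id == txn.customer_id and start <= i.timestamp < txn.timestamp
    ]
    return sorted(related, key=lambda i: i.timestamp)


# Illustrative, made-up events.
events = [
    Interaction("C1", "web", datetime(2024, 3, 1, 9), "searched deposit rates"),
    Interaction("C1", "advisor", datetime(2024, 3, 5, 14), "asked about early withdrawal"),
    Interaction("C2", "branch", datetime(2024, 3, 6, 10), "opened account"),
    Interaction("C1", "mobile", datetime(2024, 1, 2, 8), "checked balance"),
]
txn = Transaction("C1", "deposit_withdrawal", datetime(2024, 3, 7, 11))
journey = journey_before(txn, events)
print([i.channel for i in journey])  # ['web', 'advisor']
```

The other customer's branch visit is excluded, and so is the January mobile session, which falls outside the 30-day window. A wider or narrower window is a business decision rather than a technical one.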

Brobst emphasizes the critical importance of the data product concept: data products should be interoperable, and therefore able to offer a more complete view from the perspectives of risk, marketing, finance, compliance, and beyond. At the heart of a strong data product lies robust metadata that surrounds and contextualizes the core data. Organizations should make an effort to surface this metadata and make it accessible, so that everyone in the company, regardless of role, can view and interpret the data through their own lens.
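A data product in this sense can be sketched as core data bundled with its metadata. The Python below is a hypothetical illustration, not a reference design. The `DataProduct` and `ColumnMeta` classes, the owner and lineage fields, and the domain tags are all assumptions. They show how metadata could let each team, such as risk or marketing, get its own view of the same underlying data.

```python
from dataclasses import dataclass, field


@dataclass
class ColumnMeta:
    description: str
    # Business domains the column is relevant to; tag names are illustrative.
    domains: set[str] = field(default_factory=set)


@dataclass
class DataProduct:
    name: str
    owner: str                      # accountable business owner (assumed field)
    sources: list[str]              # upstream systems, kept for lineage
    columns: dict[str, ColumnMeta]  # metadata that contextualizes the core data
    rows: list[dict]                # the core data itself

    def columns_for(self, domain: str) -> list[str]:
        """Names of columns tagged as relevant to one business domain."""
        return [name for name, meta in self.columns.items() if domain in meta.domains]

    def view_for(self, domain: str) -> list[dict]:
        """Rows projected onto the columns relevant to one domain."""
        cols = self.columns_for(domain)
        return [{c: row.get(c) for c in cols} for row in self.rows]

    def describe(self) -> str:
        """Human-readable summary built entirely from the metadata."""
        lines = [f"{self.name} (owner: {self.owner}; sources: {', '.join(self.sources)})"]
        for name, meta in self.columns.items():
            tags = ", ".join(sorted(meta.domains)) or "untagged"
            lines.append(f"  {name}: {meta.description} [{tags}]")
        return "\n".join(lines)


# Illustrative, made-up data product.
customer_journeys = DataProduct(
    name="customer_journeys",
    owner="Retail Banking",
    sources=["core_banking", "mobile_app_events", "contact_center"],
    columns={
        "customer_id": ColumnMeta("Pseudonymous customer key", {"risk", "marketing", "compliance"}),
        "last_channel": ColumnMeta("Channel of last interaction before the transaction", {"marketing"}),
        "loan_amount": ColumnMeta("Requested loan amount in PLN", {"risk"}),
    },
    rows=[{"customer_id": "C1", "last_channel": "advisor", "loan_amount": 250_000}],
)
print(customer_journeys.columns_for("risk"))     # ['customer_id', 'loan_amount']
print(customer_journeys.view_for("marketing"))  # [{'customer_id': 'C1', 'last_channel': 'advisor'}]
print(customer_journeys.describe())
```

The data is stored once. The risk and marketing views differ only because of the metadata around it, which is the point the paragraph above makes about surfacing metadata to every role.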